AI needs doctors. Big pharma is taking an AI-first approach. Apple is revolutionizing clinical studies. We look at the top artificial intelligence trends reshaping healthcare.

Healthcare is emerging as a prominent area for AI research and applications.

And nearly every area across the industry will be impacted by the technology’s rise.

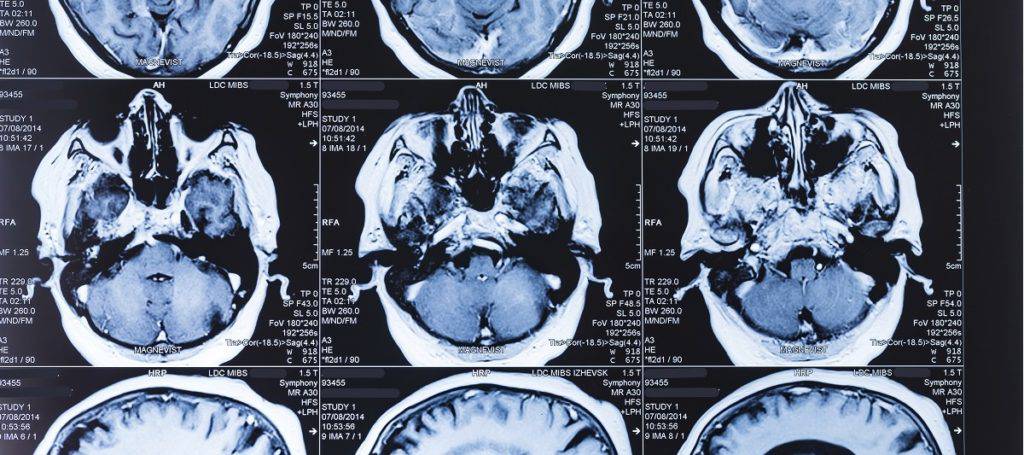

Image recognition, for example, is revolutionizing diagnostics. Recently, Google DeepMind’s neural networks matched the accuracy of medical experts in diagnosing 50 sight-threatening eye diseases.

Even pharma companies are experimenting with deep learning to design new drugs. For example, Merck has partnered with startup Atomwise, and GlaxoSmithKline with Insilico Medicine.

In the private market, healthcare AI startups have raised $4.3B across 576 deals since 2013, topping all other industries in AI deal activity.

AI in healthcare is currently geared towards improving patient outcomes, aligning the interests of various stakeholders, and reducing healthcare costs.

One of the biggest hurdles for artificial intelligence in healthcare will be overcoming the institutional inertia that keeps organizations from overhauling processes that no longer work and from experimenting with emerging technologies.

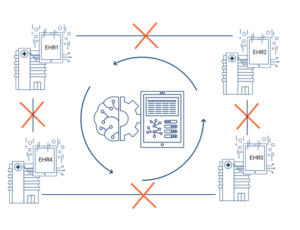

AI faces both technical and feasibility challenges that are unique to the healthcare industry. For example, there’s no standard format or central repository of patient data in the United States.

When patient files are faxed, emailed as unreadable PDFs, or sent as images of handwritten notes, extracting information poses a unique challenge for AI.

But big tech companies like Apple have an edge here, especially in onboarding a large network of partners, including healthcare providers and electronic health record (EHR) vendors.

Generating new sources of data and putting EHR data in the hands of patients — as Apple is doing with ResearchKit and CareKit — promises to be revolutionary for clinical studies.

In our first industry AI deep dive, we use the CB Insights database to unearth trends that are transforming the healthcare industry.

Rise of AI-as-a-medical-device

The FDA is fast-tracking approvals of artificial intelligence software for clinical imaging & diagnostics.

In April, the FDA approved AI software that screens patients for diabetic retinopathy without the need for a second opinion from an expert.

It was given a “breakthrough device designation” to expedite the process of bringing the product to market.

The software, IDx-DR, was able to correctly identify patients with “more than mild diabetic retinopathy” 87.4% of the time, and identify those who did not have it 89.5% of the time.
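In diagnostic-testing terms, the first figure is the software's sensitivity and the second is its specificity. Using the standard definitions, where TP, FN, TN, and FP count true positives, false negatives, true negatives, and false positives:

$$
\text{sensitivity} = \frac{TP}{TP + FN} = 0.874,
\qquad
\text{specificity} = \frac{TN}{TN + FP} = 0.895
$$

Put another way, out of every 1,000 patients who had more than mild diabetic retinopathy, roughly 874 would be correctly flagged, and out of every 1,000 who did not, roughly 895 would be correctly cleared.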

IDx-DR is one of many AI software products the FDA has approved for commercial clinical use in recent months.

Viz.ai was approved to analyze CT scans and notify healthcare providers of potential strokes in patients. After the FDA approval, Viz.ai closed a $21M Series A round from Google Ventures and Kleiner Perkins Caufield & Byers.

GE Ventures-backed startup Arterys was FDA-approved last year for analyzing cardiac images with its cloud AI platform. This year, the FDA cleared its AI software for spotting liver and lung lesions in cancer diagnostics.

Fast-track regulatory approval opens up new commercial pathways for over 70 AI imaging & diagnostics companies that have raised equity financing since 2013, accounting for a total of 119 deals.

The FDA is focused on clearly defining and regulating “software-as-a-medical-device,” especially in light of recent rapid advances in AI.

It now wants to apply the “pre-cert” approach — a program it piloted in January — to AI.

This will allow companies to make “minor changes to its devices without having to make submissions each time.” The FDA added that aspects of its regulatory framework like software validation tools will be made “sufficiently flexible” to accommodate advances in AI.

Neural nets spot atypical risk factors

Using AI, researchers are starting to study and measure atypical risk factors that were previously difficult to quantify.

Analysis of retinal images and voice patterns using neural networks could potentially help identify risk of heart disease.

Researchers at Google used a neural network trained on retinal images to find cardiovascular risk factors, according to a paper published in Nature Biomedical Engineering this year.

The research found not only that risk factors such as age, gender, and smoking status could be identified from retinal images, but also that they were “quantifiable to a degree of precision not reported before.”

In another study, Mayo Clinic partnered with Beyond Verbal, an Israeli startup that analyzes acoustic features in voice, to find distinct voice features in patients with coronary artery disease (CAD). The study found 2 voice features that were strongly associated with CAD when subjects were describing an emotional experience.

A recent study from startup Cardiogram suggests “heart rate variability changes driven by diabetes can be detected via consumer, off-the-shelf wearable heart rate sensors” using deep learning. One algorithmic approach showed 85% accuracy in detecting diabetes from heart rate.
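For readers unfamiliar with heart rate variability (HRV), it is usually summarized from the intervals between successive heartbeats (RR intervals). The sketch below computes RMSSD, the root mean square of successive differences, which is one common time-domain HRV measure. It is only an illustration of what "heart rate variability" quantifies: Cardiogram's study applied deep learning to wearable sensor data, and this hand-computed feature is not their method. The RR-interval values are made up.

```python
import math
from typing import Sequence


def rmssd(rr_intervals_ms: Sequence[float]) -> float:
    """Root mean square of successive differences between RR intervals (ms).

    RR intervals are the times between consecutive heartbeats; RMSSD is a
    standard time-domain measure of short-term heart rate variability.
    """
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Differences between each pair of consecutive beat-to-beat intervals.
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


if __name__ == "__main__":
    # Hypothetical RR intervals in milliseconds, not real patient data.
    sample = [800, 810, 790, 805, 795]
    print(f"RMSSD: {rmssd(sample):.1f} ms")  # prints "RMSSD: 14.4 ms"
```

A perfectly regular heartbeat gives an RMSSD of zero; larger values indicate more beat-to-beat variation.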

Another emerging application is using blood work to detect cancer. Startups like Freenome are using AI to find patterns in cell-free biomarkers circulating in the blood that could be associated with cancer.

AI’s ability to find patterns will continue to pave the way for new diagnostic methods and identification of previously unknown risk factors.

Apple disrupts clinical trials

Apple is building a clinical research ecosystem around the iPhone and Apple Watch. Data is at the core of AI applications, and Apple can provide medical researchers with two streams of patient health data that were not as easily accessible until now.

Interoperability — the ability to share health information easily across institutions and software systems — is an issue in healthcare, despite efforts to digitize health records.

This is particularly problematic in clinical trials, where matching the right trial with the right patient is a time-consuming and challenging process for both the clinical study team and the patient.

For context, there are over 18,000 clinical studies that are currently recruiting patients in the United States alone.

Patients may occasionally get trial recommendations from their doctors if a physician is aware of an ongoing trial.

Otherwise, the onus of scouring ClinicalTrials.gov, a comprehensive federal database of past and ongoing clinical trials, falls on the patient.

Apple is changing how information flows in healthcare and is opening up new possibilities for AI, specifically around how clinical study researchers recruit and monitor patients.

Since 2015, Apple has launched two open-source frameworks, ResearchKit and CareKit, to help clinical researchers recruit patients and monitor their health remotely.

The frameworks allow researchers and developers to create medical apps to monitor people’s daily lives.

For example, researchers at Duke University developed an Autism & Beyond app that uses the iPhone’s front camera and facial recognition algorithms to screen children for autism.

Similarly, nearly 10,000 people use the mPower app, which studies Parkinson’s disease through exercises like finger tapping and gait analysis; participants consent to share their data with the broader research community.

Apple is also working with popular EHR vendors like Cerner and Epic to solve interoperability problems.

In January 2018, Apple announced that iPhone users would be able to access all their electronic health records from participating institutions in the iPhone’s Health app.

Called “Health Records,” the feature is an extension of what AI healthcare startup Gliimpse was working on before it was acquired by Apple in 2016.

“More than 500 doctors and medical researchers have used Apple’s ResearchKit and CareKit software tools for clinical studies involving 3 million participants on conditions ranging from autism and Parkinson’s disease to post-surgical at-home rehabilitation and physical therapy.” — Apple

In an easy-to-use interface, users can find all the information they need on allergies, conditions, immunizations, lab results, medications, procedures, and vitals.

In June, Apple rolled out a Health Records API for developers.

Users can now choose to share their data with third-party applications and medical researchers, opening up new opportunities for disease management and lifestyle monitoring.

The possibilities are seemingly endless when it comes to using AI and machine learning for early diagnosis, driving decisions in drug design, enrolling the right pool of patients for studies, and remotely monitoring patients’ progress throughout studies.

Big pharma’s AI re-branding

With AI biotech startups emerging, traditional pharma companies are looking to AI SaaS startups for innovative solutions.

In May 2018, Pfizer entered into a strategic partnership with XtalPi — an AI startup backed by tech giants like Tencent and Google — to predict pharmaceutical properties of small molecules and develop “computation-based rational drug design.”

But Pfizer is not alone.

Top pharmaceutical companies like Novartis, Sanofi, GlaxoSmithKline, Amgen, and Merck have all announced partnerships in recent months with AI startups aiming to discover new drug candidates for a range of diseases, from oncology to cardiology.

“The biggest opportunity where we are still in the early stage is to use deep learning and artificial intelligence to identify completely new indications, completely new medicines.” — Bruno Strigini, Former CEO of Novartis Oncology

Interest in the space is driving up equity deals to these startups: 20 as of Q2’18, already equal to the total for all of 2017.

While biotech AI companies like Recursion Pharmaceuticals are investing in both AI and drug R&D, traditional pharma companies are partnering with AI SaaS startups.

Although many of these startups are still in the early stages of funding, they already boast a roster of pharma clients.

There are few measurable metrics of success in the drug formulation phase, but pharma companies are betting millions of dollars on AI algorithms to discover novel therapeutic candidates and transform the drawn-out drug discovery process.

AI has applications beyond the discovery phase of drug development.

In one of the largest M&A deals in artificial intelligence, Roche Holding acquired Flatiron Health for $1.9B in February 2018. Flatiron uses machine learning to mine patient data.

Today, over 2,500 clinicians use Flatiron’s OncoEMR — an electronic medical record software focused on oncology — and over 2 million active patient records are reportedly available for research.

Roche hopes to gather real world evidence (RWE) — analysis of data in electronic medical records and other sources to determine the benefits and risks of drugs — to support its oncology pipeline.

Apart from use by the FDA to monitor post-marketing drug safety, RWE can help design better clinical trials and new treatments in the future.

AI needs doctors

AI companies need medical experts to annotate images to teach algorithms how to identify anomalies. Tech giants and governments are investing heavily in annotation and making the datasets publicly available to other researchers.

Google DeepMind partnered with Moorfields Eye Hospital two years ago to explore the use of AI in detecting eye diseases. Recently, DeepMind’s neural networks were able to recommend the correct referral decisions for 50 sight-threatening eye diseases with 94% accuracy.

This was just Phase 1 of the study. To train the algorithms, DeepMind invested significant time in labeling and cleaning up its database of OCT (optical coherence tomography) scans, which are used to detect eye conditions, to make it “AI ready.”

Labeling the 14,884 scans in the dataset required a team of trained ophthalmologists and optometrists to review them.

Alibaba had a similar story when it decided to venture into AI for diagnostics around 2016.

“The samples needed to be annotated by specialists, because if a sample doesn’t have any annotation we don’t know if this is a healthy person or if it’s a sample from a sick person… This was a pretty important step,” Min Wanli of Alibaba Cloud told Alizila News.

According to Min Wanli, chief machine intelligence scientist for Alibaba Cloud, once the company partnered with health institutions to access the medical imaging data, it had to hire specialists to annotate the imaging samples.

AI unicorn Yitu Technology, which is branching into AI diagnostics, discussed the importance of having a medical team in an interview with the South China Morning Post.

Yitu claims it has a team of 400 doctors working part time to label medical data, adding that higher salary ranges for US doctors may make this an expensive option for US AI startups.

But in the US, government agencies like the National Institutes of Health (NIH) are promoting AI research.

In July this year, the NIH released a dataset of 32,000 annotated lesions identified in anonymized CT images from 4,400 patients.

Called DeepLesion, the dataset was built from images that radiologists had marked with clinical findings. It is one of the largest of its kind, according to the NIH.

The dataset is large enough to train a deep neural network, and the NIH hopes it will “enable the scientific community to create a large-scale universal lesion detector with one unified framework.”

Private companies like GE and Siemens are also looking at ways to create large-scale datasets.

In May, GE Healthcare was granted a patent describing the use of machine learning to analyze cell types in microscope images.

The patent proposes an “intuitive interface enabling medical staff (e.g., pathologists, biologists) to annotate and evaluate different cell phenotypes used in the algorithm and the presented through the interface.”

Although other algorithmic approaches have been proposed to make the process less manual, AI currently relies heavily on medical experts for training.

Making annotated datasets available to the public, similar to what DeepMind and NIH are doing, is lowering the barrier to entry for other AI researchers.

China climbs the ranks in healthcare AI

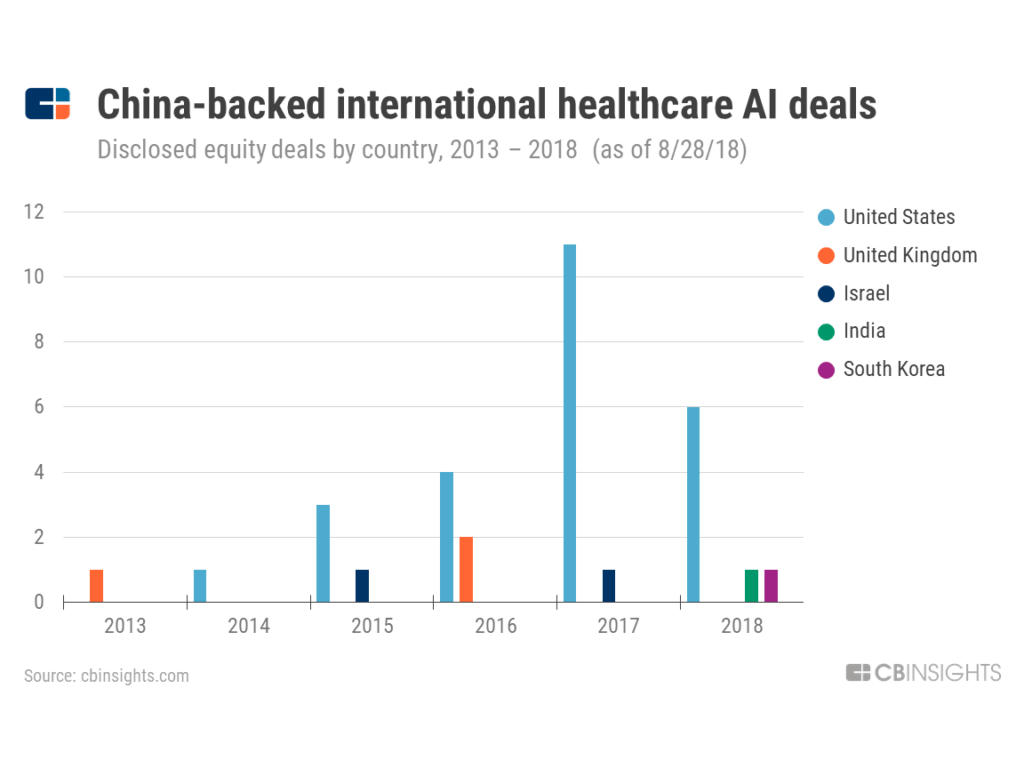

Chinese investors are increasingly investing in startups abroad, the local healthcare AI startup scene is growing, and Chinese tech giants are bringing products from other countries to mainland China through partnerships.

From negligible deal activity just a few years ago, China has quickly climbed the ranks in the global healthcare AI market.

In H1’18, China surpassed the United Kingdom to become the second most active country for healthcare AI deals.

With $72M in funding and investors like Sequoia Capital China, Infervision is the most well-funded Chinese startup focused exclusively on AI solutions for the healthcare industry.

In parallel, Chinese investment in foreign healthcare AI startups is on the rise.

More recently, Fosun Pharmaceutical took a minority stake in US-based Butterfly Network, Tencent Holdings invested in Atomwise, Legend Capital backed Lunit in South Korea, and IDG Capital invested in India-based SigTuple.

The Chinese government issued an artificial intelligence plan last year, with a vision of becoming a global leader in AI research by 2030. Healthcare is one of the 4 areas of focus for the nation’s first wave of AI applications.

The renewed focus on healthcare goes beyond becoming a world leader in AI technology.

The one-child policy, though now lifted, has contributed to an aging population: there are over 158M people aged 65+, according to last year’s official figures. This, coupled with a labor shortage, has shifted the focus toward greater automation in healthcare.

China’s efforts to consolidate medical data into one centralized repository started as early as 2016.

The country has issues with messy data and lack of interoperability, similar to the United States.

To address this, the Chinese government has opened several regional health data centers with the goal of consolidating data from national insurance claims, birth and death registries, and electronic health records.

Chinese big tech companies are now entering into healthcare AI with strong backing from the government.

In November 2017, the Chinese Ministry of Science and Technology announced that it will rely on Tencent to lead the way in developing an open AI platform for medical imaging and diagnostics, and Alibaba for smart city development (an umbrella term which would include smart healthcare).

E-commerce giant Alibaba started its healthcare AI focus in 2016 and launched an AI cloud platform called ET Medical Brain. It offers a suite of services, from AI-enabled diagnostics to intelligent scheduling based on a patient’s medical needs.

Tencent’s biggest strength is that it owns WeChat, the “app for everything.” It is the most popular social media application in China with 1B users, offering everything from messaging and photo sharing to money transfer and ride-hailing.

Around 38,000 medical institutions reportedly had WeChat accounts last year, of which 60% allowed users to register for appointments online. More than 2,000 hospitals accept WeChat payment.

WeChat potentially makes it easy for Tencent to collect huge amounts of patient and medical administrative data.

This year, Tencent partnered with Babylon Health, a UK-based startup developing a virtual healthcare assistant. WeChat users will have access to Babylon’s app, allowing them to message their symptoms and receive feedback and advice.

It also partnered with UK-based Medopad, which brings AI to remote patient monitoring. Medopad has signed over $120M in China trade deals.

Apart from these direct-to-consumer initiatives, Tencent is focusing its internal R&D on developing the Miying healthcare AI platform.

Launched in 2017, Miying provides healthcare institutions with AI assistance in the diagnosis of various types of cancers and in health record management.

The initiative appears to be focused on research at this stage, with no immediate plans to charge hospitals for its AI-assisted imaging services.

DIY diagnostics is here

Artificial intelligence is turning the smartphone and consumer wearables into powerful at-home diagnostic tools.

Startup Healthy.io claims it’s making urine analysis as easy as taking a selfie.

Its first product, Dip.io, uses the traditional urinalysis dipstick to monitor a range of urinary infections. Computer vision algorithms analyze the test strips through a smartphone camera, compensating for different lighting conditions and camera quality.

Dip.io, which is already commercially available in Europe and Israel, was recently cleared by the FDA.

Smartphone penetration has increased in the United States in recent years. In parallel, the error rate of image recognition algorithms has dropped significantly, thanks to deep learning.

Together, these two trends have opened up new possibilities for using the phone as a diagnostic tool.

For instance, SkinVision uses the smartphone’s camera to monitor skin lesions and assess skin cancer risk. SkinVision raised $7.6M from existing investors Leo Pharma and PHS Capital in July 2018.

The Amsterdam-based company will reportedly use the funding to push for a US launch with FDA clearance.

A number of ML-as-a-service platforms are integrating with FDA-approved home monitoring devices, alerting physicians when there is an abnormality.

One company, Biofourmis, is developing an AI analytics engine that pulls data from FDA-cleared medical wearables and predicts health outcomes for patients.

Israel-based ContinUse Biometrics is developing its own sensing technology. The startup monitors 20+ bio-parameters — including heart rate, glucose levels, and blood pressure — and uses AI to spot abnormal behavior. It raised a $20M Series B in Q1’18.

Apart from generating a rich source of daily data, AI-IoT has the potential to reduce time and costs associated with preventable hospital visits.

AI’s emerging role in value-based care

Artificial intelligence is beginning to play a role in quantifying the quality of service patients receive at hospitals.

A value-based service model is focused on the patient, where healthcare providers are incentivized to provide the highest quality care at the lowest possible cost.

This is in contrast to the fee-for-service model, where providers are paid in proportion to the number of services performed. The more procedures and tests that are prescribed, for example, the higher the financial incentive.

Conversations around quality of healthcare services date back to the 1960s. The challenge has been finding ways to assess healthcare quality with quantifiable, data-driven metrics.

Value-based service models gained new momentum when the Patient Protection and Affordable Care Act was passed in 2010.

Some of the safeguards in place include providing a financial incentive to providers only if they meet quality performance measures, and imposing penalties for hospital-acquired infections and preventable readmissions.

The goal of moving towards a value-based care system is to align providers’ incentives with those of the patient and payers. For instance, under the new system, hospitals will have a financial incentive in reducing unnecessary tests prescribed by physicians.

AI startup Qventus claims that Arkansas-based Mercy Hospital, which is shifting to a value-based care system, saw a 40% reduction in unnecessary lab tests in 4 months. The algorithm compared the test-ordering behavior of physicians, some of whom prescribed tests even when they weren’t strictly necessary, with that of their peers treating patients for the same condition.
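The peer comparison described above can be illustrated with a simple outlier rule. This is a hypothetical sketch, not Qventus’s algorithm: it assumes we already know, for each physician, the average number of lab tests ordered per patient for one condition, and it flags anyone whose rate exceeds the median of their peers by a chosen factor. The 1.5x threshold, the function name, and the physician identifiers are all illustrative assumptions.

```python
from statistics import median
from typing import Dict, List


def flag_high_orderers(
    tests_per_patient: Dict[str, float], factor: float = 1.5
) -> List[str]:
    """Return physicians whose test-ordering rate exceeds `factor` times the
    median rate of their peers treating the same condition.

    Hypothetical illustration: a real system would also adjust for patient
    acuity and other confounders.
    """
    flagged = []
    for doctor, rate in tests_per_patient.items():
        # Compare each physician against the median of everyone else, so an
        # outlier does not inflate its own baseline.
        peers = [r for d, r in tests_per_patient.items() if d != doctor]
        if peers and rate > factor * median(peers):
            flagged.append(doctor)
    return sorted(flagged)


if __name__ == "__main__":
    # Made-up average lab tests per patient, all for the same condition.
    rates = {"dr_a": 3.1, "dr_b": 2.8, "dr_c": 6.0, "dr_d": 3.3}
    print(flag_high_orderers(rates))  # prints ['dr_c']
```

Using the median rather than the mean keeps a single heavy orderer from shifting the baseline that everyone else is judged against.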

Qventus has raised $43M in funding from investors like Bessemer Venture Partners, Mayfield Fund, New York–Presbyterian Hospital, and Norwest Venture Partners. The company has also developed an efficiency index for hospitals.

Georgia-based startup Jvion works with providers like Geisinger, Northwest Medical Specialties, and Onslow Memorial Hospital.

Some of Jvion’s case studies highlight successful use of machine learning in identifying admitted patients who are at risk of readmission within 30 days of hospitalization.

The care team can then use Jvion’s recommendation to educate the patient on daily, preventive measures. The algorithms combine patient health data with data on socioeconomic factors (like income and ease of transportation) and history of non-compliance, among other things, to calculate risk.
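Combining clinical and socioeconomic signals into a single risk estimate is commonly done with a model such as logistic regression. The sketch below is a hypothetical illustration of that general idea, not Jvion’s method: the `Patient` fields loosely mirror the kinds of inputs mentioned above (prior utilization, access to transportation, history of non-compliance), and the weights and intercept are invented for demonstration rather than fitted to any data.

```python
import math
from dataclasses import dataclass


@dataclass
class Patient:
    prior_admissions: int        # admissions in the past year
    chronic_conditions: int      # count of diagnosed chronic conditions
    lacks_transportation: bool   # socioeconomic factor
    missed_followups: int        # history of non-compliance


# Hypothetical weights for illustration only; a real model would learn them.
WEIGHTS = {
    "prior_admissions": 0.6,
    "chronic_conditions": 0.3,
    "lacks_transportation": 0.8,
    "missed_followups": 0.5,
}
INTERCEPT = -3.0


def readmission_risk(p: Patient) -> float:
    """Estimated probability of 30-day readmission via a logistic function."""
    score = (
        INTERCEPT
        + WEIGHTS["prior_admissions"] * p.prior_admissions
        + WEIGHTS["chronic_conditions"] * p.chronic_conditions
        + WEIGHTS["lacks_transportation"] * float(p.lacks_transportation)
        + WEIGHTS["missed_followups"] * p.missed_followups
    )
    # The logistic (sigmoid) function maps the linear score into (0, 1).
    return 1.0 / (1.0 + math.exp(-score))


if __name__ == "__main__":
    low = Patient(0, 1, False, 0)
    high = Patient(3, 4, True, 2)
    print(f"low-risk example:  {readmission_risk(low):.2f}")
    print(f"high-risk example: {readmission_risk(high):.2f}")
```

A care team would then focus preventive outreach on patients whose estimated risk exceeds some threshold the organization chooses.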

Another startup in this space, OM1, which has raised $36M from investors like General Catalyst and 7wire Ventures, focuses on real-world evidence to determine the efficacy of treatments.

Another approach is for insurance companies to identify at-risk patients and intervene by alerting the care provider.

In Q2’18, Blue Cross Blue Shield Venture Partners invested in startup Lumiata, which uses AI for individualized health spend forecasts. The $11M Series C round saw participation from a diverse set of investors, including Intel Capital, Khosla Ventures, and Sandbox Industries.

AI for in-hospital management solutions is still in its nascent stages, but startups are focusing on helping providers to cut costs and improve quality of care.

What therapy bots can and can’t do

From life coaching to cognitive behavioral therapy to faith-based healing, AI therapy bots are cropping up on Facebook Messenger.

High costs of mental health therapy and the appeal of round-the-clock availability are giving rise to a new era of AI-based mental health bots.

Early-stage startups are focused on using cognitive behavioral therapy — changing negative thoughts and behaviors — as a conversational extension of the many mood tracking and digital diary wellness apps in the market.

Woebot, which raised $8M from NEA, comes with a clear disclaimer that it’s not a replacement for traditional therapy or human interaction.

Another company, Wyse, raised $1.7M last year, and is available on iTunes as an “anxiety and depression” bot.

Startup X2 AI claims that its AI bot Tess has over 4 million paid users. It has also developed a “faith-based” chatbot, “Sister Hope,” which starts a conversation with a clear disclaimer and privacy terms (on Messenger, chats are subject to Facebook’s privacy policy, and the contents of conversations are visible to Facebook).

But the ease of launching bots on Facebook and a lack of regulation make it difficult to verify some bots and their privacy terms.

Users also have access to sponsored “AI” messenger bots and interactions that appear to be a string of pre-scripted messages with little to no contextual cues.

In narrow tasks like image recognition and language processing and generation, AI has come a long way.

But, as pioneering deep learning researcher Yoshua Bengio said in a recent podcast on The AI Element, “[AI] is like an idiot savant” with no notion of psychology, what a human being is, or how it all works.

Mental health is a spectrum, with high variability in symptoms and subjectivity in analysis.

In its current state, AI can do little beyond regular check-ins and fostering a sense of “companionship” with human-like language generation. For people who need more than a nudge to reframe negative thoughts, the current generation of bots could fall short.

But as one article in Psychology Today explains, our brains are wired to believe we are interacting with a human when chatting with a bot, without the complexity of having to decipher non-verbal cues.

This could be particularly problematic for more complex mental health issues, potentially creating a dependency on bots and quick-fix solutions that are incapable of in-depth analysis or of addressing the underlying cause.

Jobs considered safest from automation are ones requiring a high level of emotional cognition and human-to-human interaction. This makes mental healthcare — despite the upside of cost and accessibility — a particularly hard task for AI.